It is important to remember that while AWS Global Accelerator uses IP Anycast to steer clients into the nearest edge POP, it is much more than that. In a similar way to Amazon CloudFront, when customer connections enter an edge POP, they are terminated on a proxy server. This means that the three-way handshake – the initial setup of the TCP connection – happens much faster as it’s confined to a shorter distance.

As you’ll recall from our discussion in Chapter 2, round-trip latency interacts with the receive window to artificially limit the throughput of TCP connections, because a sender can have at most one window of unacknowledged data in flight per round trip. TCP termination at the edge does not remove this limit, but it significantly improves the situation:

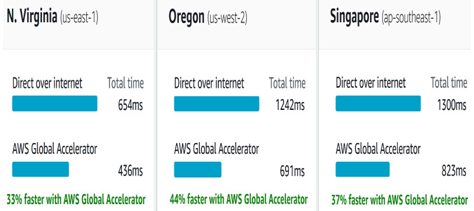

Figure 8.17 – AWS Global Accelerator Speed Comparison Tool from London, UK

To see the latency reduction and empirically test the transfer speed from different places in the world, visit the AWS Global Accelerator Speed Comparison Tool. The preceding figure shows an example of its output from a desktop in London, UK.
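To make the receive-window limit concrete, here is a minimal sketch that computes the ceiling on a single connection's throughput as window size divided by round-trip time. The 64 KiB window and both RTT values are illustrative assumptions rather than measurements from the tool, and the function name is hypothetical. It only shows why a shorter round trip on the client-facing leg raises the ceiling; it does not model the edge-to-region leg.

```python
def max_tcp_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on one TCP connection's throughput: window / RTT, in Mbps."""
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    bits_per_second = window_bytes * 8 / (rtt_ms / 1000)
    return bits_per_second / 1_000_000


# Assumed 64 KiB receive window (the maximum without window scaling).
WINDOW_BYTES = 64 * 1024

if __name__ == "__main__":
    # Hypothetical round-trip times, chosen only to show the trend.
    for label, rtt_ms in [
        ("client to distant region", 150.0),
        ("client to nearby edge POP", 10.0),
    ]:
        mbps = max_tcp_throughput_mbps(WINDOW_BYTES, rtt_ms)
        print(f"{label}: {mbps:.1f} Mbps")
```

With these assumed numbers, the ceiling rises from roughly 3.5 Mbps at 150 ms to roughly 52.4 Mbps at 10 ms for the same window size.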

Endpoint groups

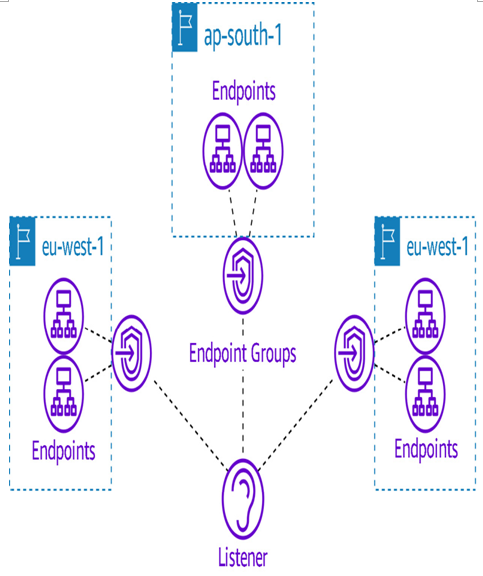

Endpoints can be thought of as similar to origins in Amazon CloudFront – they are the ultimate destination of a given connection:

Figure 8.18 – AWS Global Accelerator overview of its logical hierarchy

The types of endpoints that are supported by AWS Global Accelerator include ALBs, NLBs, and EC2 instances in regions – not in AWS Wavelength or AWS Local Zones.

Preferential distribution of connections

New connections to a listener’s Anycast IPs always enter the AWS network at the closest edge location. That’s the whole idea of IP Anycast. From there, the default behavior is for a given connection to be sent to the closest region in which an endpoint group is configured for your listener.

Consider the scenario where you’ve configured a listener with two endpoint groups – one in Virginia and one in Tokyo. Each group has two EC2 instance endpoints, one in each of two AZs in its region. Now, let’s say a connection originates in Austin, TX, and enters the AWS backbone in Houston, TX. By default, the AWS Global Accelerator service in the Houston edge POP is going to send that connection to Virginia. Once it gets to Virginia, it will be routed to the EC2 instance in one of the AZs. A second connection from Dallas will go to Virginia as well, but it will get sent to the other AZ’s EC2 instance in a round-robin fashion. This is just multiple tiers of load balancing like you’re already used to with an ALB, for instance:

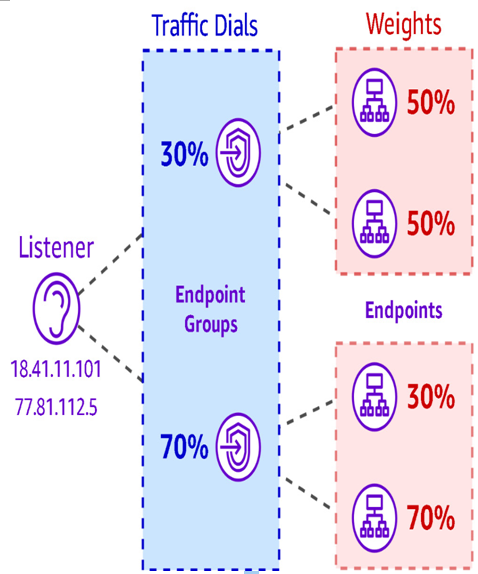

Figure 8.19 – AWS Global Accelerator traffic dials versus weights
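The default behavior in the scenario above can be sketched as a toy model: pick the closest region that has an endpoint group, then rotate through that group's endpoints. This is not how the service is implemented. The latency figures, instance IDs, and the `route_connection` helper are all invented for illustration.

```python
from itertools import cycle

# Hypothetical round-trip latencies (ms) from the Houston edge POP to each
# region that has an endpoint group. The numbers are made up for illustration.
LATENCY_FROM_EDGE_MS = {"us-east-1": 35, "ap-northeast-1": 140}

# Two EC2 instance endpoints per group, one per AZ. Instance IDs are invented.
ENDPOINT_GROUPS = {
    "us-east-1": ["i-virginia-az1", "i-virginia-az2"],
    "ap-northeast-1": ["i-tokyo-az1", "i-tokyo-az2"],
}

# One independent round-robin iterator per endpoint group.
_next_endpoint = {region: cycle(ids) for region, ids in ENDPOINT_GROUPS.items()}


def route_connection(latency_ms=LATENCY_FROM_EDGE_MS):
    """Pick the closest region, then the next endpoint in that region."""
    region = min(latency_ms, key=latency_ms.get)
    return region, next(_next_endpoint[region])


if __name__ == "__main__":
    print(route_connection())  # first connection
    print(route_connection())  # second connection
```

In this model, two consecutive connections both land in Virginia (`us-east-1`) but on different instances, and Tokyo receives nothing as long as Virginia is the closest region.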

However, there are situations where this behavior is not what we want. For example, you might want to set things up such that there is an active region and a standby region for a given application. Or maybe you wish to do blue/green swaps between application versions within a region. Perhaps you are chasing a difficult problem in a global application, and it’s not clear if the problem is due to a connection’s source or destination.

In these situations, it is possible to distribute connections arbitrarily, both between regions and within them. This can be done at the regional/endpoint group level with traffic dials, or within a region at the endpoint level using weights.
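As a rough illustration of how the two controls combine, the sketch below computes the expected share of connections per endpoint for one client location. It assumes that a traffic dial is a percentage of the traffic that would otherwise reach that group, that the remainder spills over to the next-closest group, and that weights split a group's traffic proportionally. The class names, the `expected_shares` function, and the instance IDs are hypothetical; this is a simplified model, not the service's algorithm.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Endpoint:
    endpoint_id: str
    weight: int = 128  # service default; valid range is 0-255


@dataclass
class EndpointGroup:
    region: str
    traffic_dial_percent: float = 100.0  # service default
    endpoints: List[Endpoint] = field(default_factory=list)


def expected_shares(groups_closest_first: List[EndpointGroup]) -> Dict[str, float]:
    """Expected fraction of connections per endpoint (simplified model)."""
    shares: Dict[str, float] = {}
    remaining = 1.0  # fraction of traffic not yet accepted by any group
    for group in groups_closest_first:
        total_weight = sum(e.weight for e in group.endpoints)
        if total_weight == 0:
            # Model simplification: a group with no weighted endpoints
            # accepts nothing, so its traffic moves on to the next group.
            for e in group.endpoints:
                shares[e.endpoint_id] = 0.0
            continue
        accepted = remaining * group.traffic_dial_percent / 100.0
        remaining -= accepted
        for e in group.endpoints:
            shares[e.endpoint_id] = accepted * e.weight / total_weight
    return shares


if __name__ == "__main__":
    virginia = EndpointGroup(
        "us-east-1",
        traffic_dial_percent=80.0,
        endpoints=[Endpoint("i-virginia-az1", 192), Endpoint("i-virginia-az2", 64)],
    )
    tokyo = EndpointGroup(
        "ap-northeast-1",
        endpoints=[Endpoint("i-tokyo-az1"), Endpoint("i-tokyo-az2")],
    )
    for endpoint_id, share in expected_shares([virginia, tokyo]).items():
        print(f"{endpoint_id}: {share:.0%}")
```

Under these assumptions, dialing Virginia down to 80% sends the remaining 20% to Tokyo, while the 192/64 weights split Virginia's portion three to one between its two instances.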