ECS is available in AWS Wavelength in two forms – Standard ECS on EC2 and ECS Anywhere. However, ECS on AWS Fargate is not supported.

Applications that span multiple AWS Wavelength Zones are really intended to function in a hub-and-spoke model. While it is possible through the use of multiple VPCs and AWS Transit Gateway to enable communication between a container in, say, New York City and one in Atlanta, this is usually a bad idea. It defeats the entire purpose of moving resources to such a specific location to reduce latency.

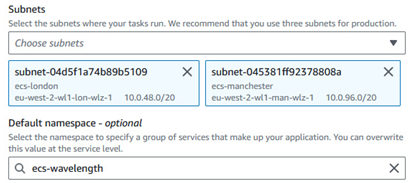

Microservice-based applications designed for orchestrated containerized environments tend to form a mesh of dependencies. The orchestration layer often does things such as keeping two instances of a given microservice container on separate physical nodes for high availability. However, it generally doesn’t measure or consider the latency across the entire chain of dependencies as seen by the initial requester. In other words, if you deploy an ECS cluster and simply add all of your AWS Wavelength Zone subnets to the default capacity provider, you will end up with cross-zone communication paths that you don’t want:

Figure 7.21 – The wrong way to deploy an ECS cluster with AWS Wavelength Zones

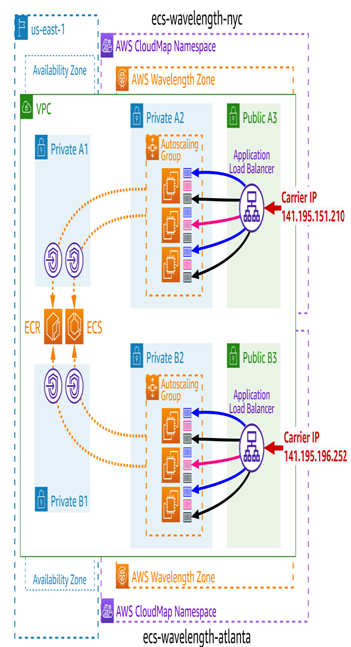

This is why, with ECS, it is best to maintain a separate EC2 Auto Scaling group in each AWS Wavelength Zone and register each one as a distinct capacity provider. These capacity providers should also fall under separate namespaces in AWS Cloud Map, so that a service in one zone discovers only its peers in the same zone. Figure 7.22 shows this topology.
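The per-zone layout described above can be sketched with the AWS CLI. The following is a dry-run sketch only: it prints the commands rather than running them, and the cluster name, VPC ID, zone labels, and Auto Scaling group ARNs are all hypothetical placeholders. Associating the capacity providers with the cluster and creating the services are left out.

```shell
#!/bin/sh
# Dry-run sketch: prints one capacity provider command and one Cloud Map
# namespace command per Wavelength Zone. Nothing is sent to AWS.
# Every name, ID, and ARN below is a hypothetical placeholder.

CLUSTER="edge-app"
VPC_ID="vpc-EXAMPLE"

plan_zone() {
  zone="$1"      # short label for the Wavelength Zone, e.g. "nyc"
  asg_arn="$2"   # ARN of the Auto Scaling group whose subnet is in that zone

  # One capacity provider per zone, backed only by that zone's Auto Scaling group.
  printf '%s\n' "aws ecs create-capacity-provider --name ${CLUSTER}-${zone}-cp --auto-scaling-group-provider autoScalingGroupArn=${asg_arn}"

  # One private DNS namespace per zone, so service discovery stays zone-local.
  printf '%s\n' "aws servicediscovery create-private-dns-namespace --name ${zone}.${CLUSTER}.internal --vpc ${VPC_ID}"
}

# Example: two zones, each with its own (placeholder) Auto Scaling group.
plan_zone nyc "arn:aws:autoscaling:EXAMPLE-nyc"
plan_zone atl "arn:aws:autoscaling:EXAMPLE-atl"
```

Printing the commands first makes it easy to review that no capacity provider or namespace is shared between zones before anything is created.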

Figure 7.22 – ECS cluster spanning two AWS Wavelength Zones with separate namespaces

Amazon EKS

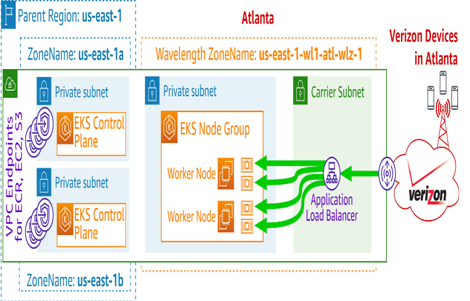

Distributed applications running on EKS are supported within AWS Wavelength Zones. However, there is only one supported deployment model. In the same way extended clusters work with AWS Outposts, the EKS control plane components must be deployed within the parent region. The next diagram shows an example of this setup:

Figure 7.23 – Self-managed EKS node group in an AWS Wavelength Zone

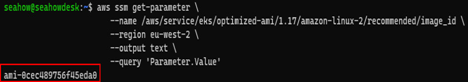

Note that you cannot deploy managed EKS node groups into AWS Wavelength Zones; only self-managed node groups are supported. This means the worker nodes are part of an Auto Scaling Group, and at launch they run the /etc/eks/bootstrap.sh script, which tells them how to join the Kubernetes cluster. This script is part of the EKS optimized Amazon Machine Image (AMI) that AWS publishes. The ID of the latest version of this AMI can be found with the following CLI command:

aws ssm get-parameter \
  --name /aws/service/eks/optimized-ami/1.17/amazon-linux-2/recommended/image_id \
  --region eu-west-2 \
  --output text \
  --query 'Parameter.Value'

Figure 7.24 – Obtaining the ID for the latest EKS optimized AMI in eu-west-2 from SSM
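The bootstrap step mentioned earlier is typically supplied as instance user data in the launch template behind the Auto Scaling Group. Here is a minimal sketch of rendering such user data; the cluster name and node label are hypothetical, and the use of the script's --kubelet-extra-args option to label the nodes is an illustrative choice rather than a requirement.

```shell
#!/bin/sh
# Sketch: render user data for a self-managed EKS worker node.
# CLUSTER_NAME and NODE_LABELS are hypothetical values; replace with your own.

CLUSTER_NAME="wavelength-demo"
NODE_LABELS="zone-type=wavelength"

render_user_data() {
  cluster="$1"   # name of the EKS cluster the node should join
  labels="$2"    # Kubernetes node labels passed through to the kubelet

  # The EKS optimized AMI ships /etc/eks/bootstrap.sh; it takes the
  # cluster name as its first argument.
  cat <<EOF
#!/bin/bash
set -o errexit
/etc/eks/bootstrap.sh ${cluster} --kubelet-extra-args '--node-labels=${labels}'
EOF
}

# Print the user data so it can be reviewed or saved into a launch template.
render_user_data "$CLUSTER_NAME" "$NODE_LABELS"
```

Labeling the nodes this way lets pod scheduling rules target the Wavelength Zone nodes explicitly. The nodes will only register with the cluster once their IAM role is mapped in the aws-auth ConfigMap.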

Self-managed node groups for EKS

For more information, see Launching self-managed Amazon Linux 2 nodes in the Amazon EKS User Guide. Also, read Managing users or IAM roles in the Amazon EKS User Guide for information on applying the aws-auth ConfigMap to your EKS cluster.