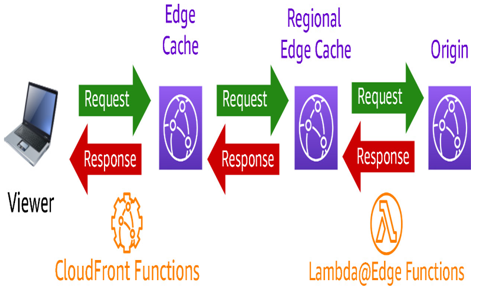

As noted previously, AWS Lambda@Edge functions execute inside the RECs. That’s better than having to run them in the core regions – but what can we do inside the edge POPs themselves? That is where we must use Amazon CloudFront functions:

Figure 8.13 – Visualization of where Amazon CloudFront functions and AWS Lambda@Edge run

These are similar to Lambda@Edge functions and sit in the same viewer request flow within Amazon CloudFront. However, because they run at the edge POPs themselves, they execute very close to end users. They are also very fast, with sub-millisecond startup times. The trade-off is that the edge POPs have far fewer compute resources to go around, so the functions must be lightweight: their maximum execution time is less than 1 millisecond. Part of what makes this possible is the runtime they target. AWS Lambda@Edge supports full Node.js and Python runtimes. Amazon CloudFront functions, by contrast, are written in JavaScript for a purpose-built, lightweight runtime (ECMAScript 5.1-compliant, with some newer language features) that has no network or file system access:

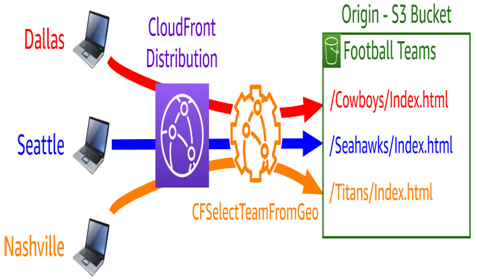

Figure 8.14 – An Amazon CloudFront function making decisions based on the user’s geographic location

These are well suited to simple decision-making. Suppose that, instead of geo-restricting content in our distribution outright, we want to steer users toward the landing page of their local sports team before they log in to the site. A function could do this by inspecting the CloudFront geolocation headers and modifying the request accordingly, as shown in the preceding figure.
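The following is a minimal sketch of such a viewer request function, not a definitive implementation. It assumes the distribution is configured so that the `cloudfront-viewer-country` and `cloudfront-viewer-country-region` headers reach the function, for example through a cache or origin request policy. The `TEAM_PAGES` map, the `/teams/...` paths, and the `session` cookie name are hypothetical. The code uses ES5-style syntax (`var`, no arrow functions) so that it fits the restricted CloudFront functions runtime.

```javascript
// Sketch of a CloudFront functions viewer-request handler.
// HYPOTHETICAL: TEAM_PAGES, the /teams/... paths and the 'session' cookie.
// ASSUMPTION: the geolocation headers below are made available to the
// function by the distribution's configuration.
var TEAM_PAGES = {
    'US-TX': '/teams/dallas',
    'US-WA': '/teams/seattle',
    'US-MA': '/teams/boston'
};

function handler(event) {
    var request = event.request;
    var headers = request.headers;
    var cookies = request.cookies || {};

    // Only steer visitors who request the home page and are not logged in.
    if (request.uri !== '/' || cookies['session']) {
        return request;
    }

    // Header names in the event object are lowercase.
    var country = headers['cloudfront-viewer-country'];
    var region = headers['cloudfront-viewer-country-region'];
    if (!country || !region) {
        return request; // No geolocation data: fall back to the default page.
    }

    var teamPage = TEAM_PAGES[country.value + '-' + region.value];
    if (teamPage) {
        // Rewrite the URI so CloudFront serves the local team's landing page.
        request.uri = teamPage;
    }
    return request;
}
```

When the function returns the modified request, CloudFront continues processing it as usual: it checks the cache and, on a miss, contacts the origin. The function could instead return a response object, such as a 302 redirect. In that case CloudFront answers the viewer directly, and the browser's address bar changes to the team page.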

Amazon CloudFront functions can only be associated with the viewer request and viewer response stages. If you need to act on the origin request or origin response, you will need to stick with AWS Lambda@Edge.
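To illustrate the viewer response stage, here is a minimal sketch of a function associated with that event. Adding an HSTS header is an assumed, illustrative use case and is not prescribed by the text. At this stage the function works on the response CloudFront is about to send to the viewer (`event.response`). It has no part in the exchange between CloudFront and the origin.

```javascript
// Sketch of a CloudFront functions viewer-response handler.
// ASSUMPTION: adding an HSTS header is only an illustrative use case.
function handler(event) {
    // The response CloudFront is about to return to the viewer.
    var response = event.response;
    var headers = response.headers;

    // Header names in the event object are lowercase; each value is
    // wrapped in an object with a 'value' property.
    headers['strict-transport-security'] = {
        value: 'max-age=63072000; includeSubDomains'
    };

    return response;
}
```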

Leveraging IP Anycast with AWS Global Accelerator

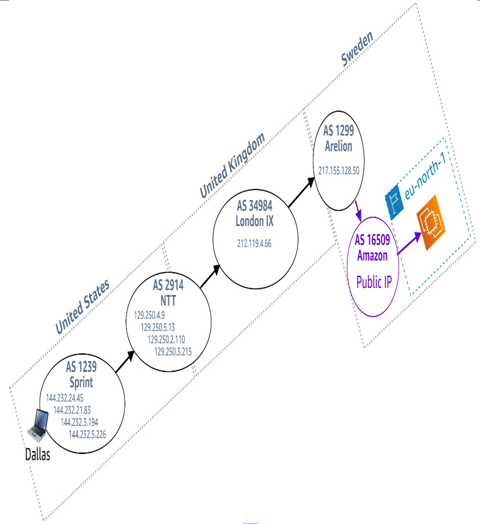

In Chapter 2, we discussed how the primary causes of packet loss on the internet are congestion or throttling at the junction of two autonomous systems along the way:

Figure 8.15 – A client in Dallas, USA, accessing an application in Stockholm, SE, via the public internet

Let’s think about an application that runs on an EC2 instance in the AWS region in Stockholm, SE. The preceding figure provides an example of the path such a connection might take from a client in Dallas, USA.

As you can see, the packets traverse four different autonomous systems before they enter the AWS network in Stockholm. That gives congestion and QoS throttling mechanisms many opportunities to degrade the user experience.
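To make the effect of multiple hand-offs concrete, here is a back-of-the-envelope model. It assumes, purely for illustration, that each of $k$ junctions between autonomous systems is congested independently with the same probability $p$. Real congestion is neither independent nor uniform, so treat the numbers as a sketch.

$$
P(\text{at least one congested junction}) = 1 - (1 - p)^{k}
$$

Take a hypothetical $p = 0.02$. With $k = 4$ junctions, the probability is $1 - 0.98^{4} \approx 0.078$, or about 7.8%. With a single junction it is just $1 - 0.98^{1} = 0.02$. The exact values are invented, but the shape of the formula shows that the risk compounds with every additional hand-off.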

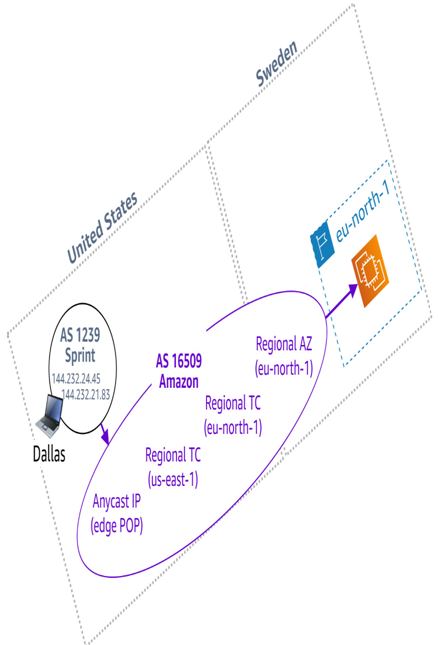

Now, let’s look at the same application, but this time with AWS Global Accelerator configured in front of it. Yes, the client in Dallas still has to physically get to Stockholm, so it’s going to be a relatively high-latency connection:

Figure 8.16 – A client in Dallas, USA accessing an application in Stockholm, SE, via AWS Global Accelerator

That said, the preceding figure shows the new path the connection takes: it enters the AWS network much earlier. This is where IP anycast comes in. The accelerator’s static IP addresses are advertised from AWS edge locations around the world, so the client’s traffic is routed to a nearby edge location. From there, it is carried over the AWS global network. That path is more direct and far less susceptible to congestion and throttling than the public internet. Even though we haven’t moved anything closer to the user, we have stabilized the path, so their experience is very likely to improve. The improvement may even be enough that we don’t need to distribute anything in the first place.
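To isolate the anycast idea, here is a toy model of how a client's ISP might choose where to send traffic for an address that many locations advertise. This is not AWS code. It assumes a simplified BGP decision that prefers the shortest AS path, and the locations and path lengths are hypothetical.

```javascript
// Toy model of IP anycast ingress selection (illustrative only).
// ASSUMPTION: every listed location advertises the same anycast address,
// and the ISP simply prefers the advertisement with the shortest AS path.
function selectIngress(advertisements) {
    var best = null;
    for (var i = 0; i < advertisements.length; i++) {
        var ad = advertisements[i];
        if (best === null || ad.asPathLength < best.asPathLength) {
            best = ad;
        }
    }
    return best; // null if nothing is advertised
}

// HYPOTHETICAL advertisements seen by an ISP in Dallas for one accelerator IP.
var ads = [
    { location: 'Stockholm', asPathLength: 4 },
    { location: 'Frankfurt', asPathLength: 3 },
    { location: 'Dallas', asPathLength: 1 }
];

var ingress = selectIngress(ads);
console.log('Traffic enters the AWS network at: ' + ingress.location);
```

In practice, BGP weighs other attributes, such as local preference, before AS-path length. The model only shows why a single IP address can lead different clients to different nearby entry points.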